The life cycle of Drosophila melanogaster lasts a couple of weeks, so the humble fruit fly is far more useful than a giant tortoise to a geneticist with a hypothesis and a deadline. Similarly, for AI researchers, chess has long been a useful testbed because it has clear rules but unfathomable depth.

And yet there is an incongruity. Compared with the breakneck development of computing, the game of chess remains reassuringly dependable, while the thing we see evolving in real time is AI itself. Not long after ChatGPT was first released, late in 2022, some people had fun making it play chess. Stockfish is the leanest, meanest chess engine there is, the apotheosis of decades of incremental improvement in one narrow niche. ChatGPT may be an apex predator in its own ecosystem, but pitting it against Stockfish is like watching a floundering lion fight a shark. The cascade of elementary blunders and illegal moves from ChatGPT showed its limitations.

In AI terms, 2022 was aeons ago. Artificial intelligence continues to improve in leaps and bounds, notwithstanding the uneven reception of the new GPT-5 earlier this month. ChatGPT faces fierce competition from other large language models (LLMs), including Claude, Gemini, Grok, DeepSeek and Kimi. Some excel at coding, while others will ace your homework essay. Researchers who wish to compare the strengths of these models have devised various benchmarks, rather like giving an SAT test to a chatbot. But why not use chess skill as a new form of standardised test?

Kaggle is an established online platform for data science competitions, owned by Google. The Kaggle Game Arena, where LLMs can compete using various games as a benchmark, is an innovation. To mark its launch, they organised a three-day exhibition chess tournament between the top LLMs. Let’s be clear – LLMs are out of their depth and, for the time being, the quality of play remains woeful. But at least a majority of the games ended in checkmate rather than in disqualification due to illegal moves.

Millions of chess games are available online, so presumably these models have ingested lots of notation as part of their training. Almost all the games began with the Sicilian defence (1 e4 c5), presumably because humans write about it.

LLMs are sometimes described as ‘auto-complete on steroids’. Their output has a veneer of coherence, but after some probing it becomes clear that their ‘mental model’ of the world is deficient, as when people discovered that some gave incorrect answers when asked how many r’s are in the word ‘strawberry’. (Though current models cope fine when I tested this.) So in LLM chess, the opening often makes sense, presumably because it is regurgitated, but serious errors show up later on. Nevertheless these games look like a huge step forward compared with a couple of years ago.

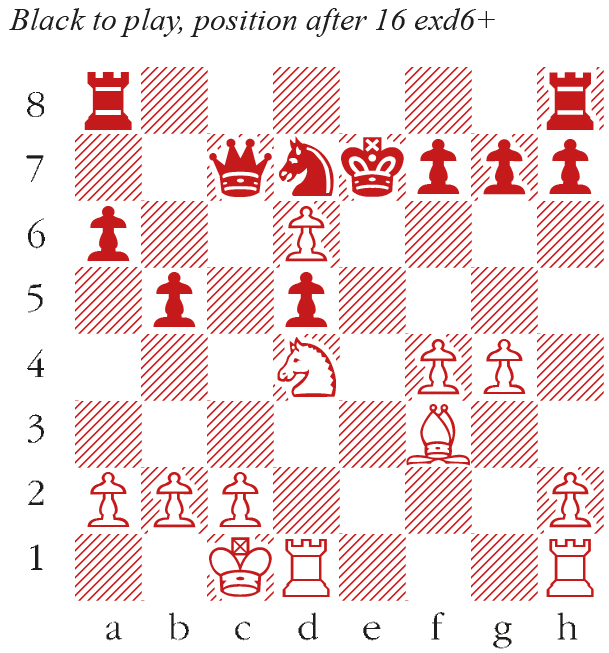

The following game was played in the final, in which OpenAI’s o3 model defeated Grok 4, developed by Musk’s xAI.

OpenAI o3-Grok 4

Kaggle Game Arena Exhibition Tournament, August 2025

1 e4 c5 2 Nf3 d6 3 d4 cxd4 4 Nxd4 Nf6 5 Nc3 a6 6 Bg5 e6 7 f4 Be7 8 Qf3 Qc7 9 O-O-O Nbd7 10 g4 b5 11 e5? Bb7 A nasty skewer, but o3 doubles down! 12 Bg2?? Just losing the queen. Bxf3 13 Bxf3 Nd5 14 Nxd5 exd5 15 Bxe7 Kxe7 16 exd6+ (see diagram) Qxd6?? 17 Nf5+ Ke6 18 Nxd6 Kxd6 19 Rxd5+ 19 Rhe1+ was stronger. Kc7 20 Rxd7+ Kxd7 21 Bxa8 Rxa8 22 Rd1+ Ke7 I’ll spare you the rest. Black resigned at move 54.

Comments