The new version of the Online Safety Bill seems, on the face of it, to be an improvement on the previous one. We’ll know more when it’s published – all we have to go on for now is a DCMS press release and some amendments moved by Michelle Donelan, the Digital Secretary and architect of the new Bill. The devil will be in the detail.

Let’s start with something that hasn’t got much coverage today, but which I think is important. Plans to introduce a new harmful communications offence in England and Wales, making it a crime punishable by up to two years in jail to send or post a message with the intention of causing ‘psychological harm amounting to at least serious distress’, have been scrapped.

That’s good news because, as Kemi Badenoch said in July, ‘We should not be legislating for hurt feelings.’

The bad news is, the new communications offence was intended to replace some of the more egregious offences in the Communications Act 2003 and the Malicious Communications Act 1988. The communications offences in the Malicious Communications Act are still going to be repealed, but not S127 of the Communications Act.

But this change does solve a problem I’d flagged up in the previous version of the Bill. It obliged the large social media platforms (Facebook, Instagram, Twitter, etc) to remove content in every part of the UK if it’s illegal in any part of the UK (‘offence means any offence under the law of any part of the United Kingdom’). So if it’s illegal to say something in Scotland, in-scope providers would have to remove it in England, Wales and Northern Ireland.

That created a problem because the new harmful communications offence would only have replaced some other communications offences in England and Wales, not in Scotland and Northern Ireland. So when it came to what you could and couldn’t say online, the new communications offence would not have replaced one set of rules prohibiting speech with another; it would have just added a new set.

That problem won’t arise now, and nor will social media platforms have to enforce the new speech restrictions in the Hate Crime and Public Order (Scotland) Act across the whole of the UK because the government amended the previous version of the Bill so providers won’t be obliged to remove speech that’s prohibited by laws made by a devolved authority.

Fears that Nicola Sturgeon might become the content moderator for the whole of the UK can be laid to rest – at least for the time being. It’s worth bearing in mind that a future Labour government could change that with a single, one-line amendment.

But the new version of the Online Safety Bill won’t criminalise saying something, whether online or offline, that causes ‘hurty feelings’, and we should be grateful for that.

Okay, now on to the main event.

Clause 13 of the Bill (‘Safety duties protecting adults’), which would have forced in-scope providers to set out in their terms of service how they intended to ‘address’ content that is legal but harmful to adults, has been scrapped.

How big a win is that?

Some of today’s papers are reporting this as removing any reference to ‘legal but harmful’ content from the Bill, but the phrase ‘legal but harmful’ has never appeared in any version of this Bill. And, thanks to an amendment I and others lobbied for in July, the previous version of the Bill would have made it clear that one of the ways providers could ‘address’ this content would have been to do nothing.

The Times today says ‘the government has dropped plans to force social media and search sites to take down material that is considered harmful but not illegal’. But that’s not correct since it never planned to force sites to do that.

The objection to clause 13 was more nuanced. It was that if the government published a list of legal content it considered harmful to adults and imposed an obligation on in-scope providers to say how they intended to ‘address’ it, that would nudge them to remove it.

Even though the option to do nothing was available to providers in the previous version of the Bill, it would have been a brave social media company that said it would do nothing to ‘address’ this content, given that the government had designated it as harmful to adults.

Another objection to the previous version was that, according to its provisions, the list of legal content that was harmful to adults was going to be included in a statutory instrument and not in the Bill itself. Indeed, Nadine Dorries, then the Digital Secretary, published an ‘indicative list’ of what was going to be included and, among other horrors, it included ‘health and vaccine misinformation and disinformation’.

One concern free speech groups had is that after this list had been drawn up it could easily be added to by another statutory instrument, whether by this government or the next, creating an anti-free speech ratchet effect. That would have been a hostage to fortune.

In the new version of the Bill, the list of legal content that’s harmful to adults won’t be included in a supplementary piece of legislation, but will appear on the face of the Bill, which will make it more difficult for future governments to enlarge. It’s not described as content that’s ‘legal but harmful’ to adults, but that’s essentially what it is. So you can ignore reports that the government has ditched this concept altogether. Rather, it has decided to include the Index Librorum Prohibitorum not in a statutory instrument but in the Bill itself.

In the DCMS press release, this lawful but awful content is defined as follows: ‘legal content relating to suicide, self-harm or eating disorders, or content that is abusive, or that incites hatred, on the basis of race, ethnicity, religion, disability, sex, gender reassignment or sexual orientation’. We can expect similar wording to appear in the new version of the Bill when it returns to parliament next week.

That’s almost identical to the ‘indicative list’ of legal but harmful adult content set out by Nadine Dorries, so reports of its death are greatly exaggerated. Although, credit where credit’s due, there’s no reference to ‘misinformation’ or ‘disinformation’ in the new Prohibitorum.

Another key difference is that instead of saying how they intend to ‘address’ this content in their terms of service, providers will have to say what tools they’re going to make available to their users who don’t want to be exposed to it. This is what Michelle Donelan means when she says in today’s Telegraph (with a fair amount of top spin), ‘I have removed “legal but harmful” in favour of a new system based on choice and freedom’.

The new system is definitely an improvement. Not because the previous version of the Bill would have forced social media companies to ban content that was legal but harmful to adults outright – that’s based on a misunderstanding – but because it applied pressure on them to do that.

The new version of the Bill is signalling to providers that if they want to make this content available to adult users in an unrestricted form they can, provided they supply their most sensitive users with tools to protect themselves from it – the social media equivalent of a ‘safe browsing’ mode. That’s better than the previous version. The new system empowers adult users to choose what content they’d like to see, with the state no longer nudging social media platforms into restricting legal but harmful content for everyone, regardless of their appetite for this material.

But I have some concerns about the ‘user empowerment’ system.

There’s a significant risk that most providers will make the ‘safe browsing’ mode their default setting. So if adult users want to see lawful but awful content they will have to opt in.

That may mean politically contentious content – saying transwomen aren’t women, for instance – will not be visible to users of the big social media platforms unless they’ve opted to dial down their safety settings. Why? Because the new Bill is telling providers they have to provide their most sensitive users with tools to protect themselves from, among other things, content that abuses or incites hatred towards people based on their protected characteristics (‘race, ethnicity, religion, disability, sex, gender reassignment or sexual orientation’). Woke activists will argue that a whole range of contentious views fall into that category and that all users should be protected from them by default, i.e. unless they switch their settings to ‘unsafe’.

Defenders of the new system will argue that users will still have the option of adjusting their settings so they can see legal but harmful content – and that’s better than banning it outright, which the previous version of the Bill nudged providers to do. But some users won’t be aware they can adjust their settings in this way, or they will but won’t know how, or they won’t want to in case a woke colleague sees something ‘hateful’ over their shoulder and reports them to HR.

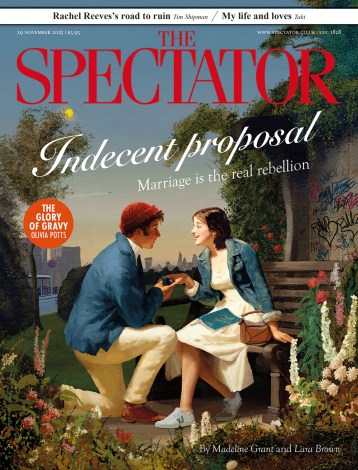

And what about politically contentious websites like The Conservative Woman (TCW) and Novara Media – perhaps even The Spectator? Will their content be judged ‘unsafe’ by providers and only made available to users who ‘opt in’? That sounds far-fetched, but TCW was blocked by default by the Three mobile network earlier this year.

When the Free Speech Union looked into it, we discovered it was because the British Board of Film Classification, to which Three had outsourced the job of deciding which websites were ‘safe’, had given TCW an 18 rating. So Three restricted access to the site for its users because its default settings excluded any websites the BBFC classed as only suitable for adults.

In light of this, it’s not unrealistic to think that under the new system politically contentious websites will be judged unsafe by the big social media platforms and banned by default (although not outright). And that will have a negative impact on their commercial viability. Not only will it limit their reach, it will make it harder for them to get advertising because most companies are concerned about ‘brand safety’ and won’t want to advertise on websites that are deemed unsafe by companies like Facebook. To a certain extent that happens already, but it could get significantly worse after this Bill becomes law.

In the previous version there were clauses making it more difficult for social media platforms to restrict ‘content of democratic importance’ and ‘journalistic content’, which were designed to mitigate the chilling effect of clause 13. It’s not clear how these will work in the context of the new ‘user empowerment’ model and I fear that providers will now be able to comply with these free speech duties by protecting this content for people who’ve dialled down their safety settings but not for those who’ve dialled them up. If that’s the case, it’s something that needs to be fixed as the Bill goes through parliament.

In one respect at least, free speech will be better protected in the new version. The previous one said providers would have ‘a duty to have regard to the importance of protecting users’ right to freedom of expression within the law’. That was pretty toothless since ‘have regard’ is the least onerous of the legal duties. In the new version, I’m told, that has been beefed up to ‘have particular regard’, which is better. (That’s something the Free Speech Union has been lobbying for.)

There are other free speech issues with the new Bill that Big Brother Watch has flagged up. But I think that, taken as a whole, it’s an improvement on the previous version. Michelle Donelan has had to steer a difficult path between those of us lobbying for more free speech protections and a vast array of groups petitioning her to make the Bill more restrictive, including factions within her own party. I don’t think she had the political room to do any more than she’s done and I don’t blame her for trying to spin the changes to the ‘legal but harmful’ provisions as more radical than they are.

Nevertheless, I still have concerns about the Bill and will be scrutinising it carefully when the new version is published. If, as I suspect, the duties to protect content of democratic importance and journalistic content have lost some of their force, I hope to work with parliamentarians in the Commons and the Lords to reinvigorate them.
