Decades are happening in weeks in the world of artificial intelligence. A fortnight ago, OpenAI released GPT-4, the latest model of its chatbot. It passed the bar exam in the 90th percentile, whereas the previous model only managed the tenth. Last week, Google introduced its own chatbot, Bard. Now, the British government is announcing plans to regulate AI for the first time, as well as to introduce it into hospitals and schools. Even some of the biggest technophobes are having to grasp this brave new world.

We’re familiar with some of the technology by now, but we know little about the humans in the world of AI. From Steve Jobs to Bill Gates and Mark Zuckerberg, the previous generation of techies were household names, so well known that they were depicted by everyone from Harry Enfield to Jesse Eisenberg. But the chatbots seem to have come from the ether. Who makes them? Who willed them into existence? And who wants to stop them?

Sam Altman is the king in this world. He’s the CEO of OpenAI, the San Francisco lab behind ChatGPT and the image generator Dall-e. He’s 37 and looks like an AI-generated tech bro: hoodie, messy black hair, nervous eyes. He dropped out of a computer science course at Stanford, and became the CEO of a firm called Loopt at the age of 19 (raising $30 million, which is apparently nothing in venture-capital world). After that, he became a partner and eventually president of Y Combinator, a startup ‘accelerator’. It helped launch Reddit (which Altman was CEO of for eight days), Stripe, Airbnb, Dropbox and Coinbase.

Altman did all of this by 30. Then he started getting scared. He seemed to become convinced that a superintelligent AI could feasibly wipe out everything we hold dear. Altman wasn’t convinced that Google, leading the field at the time in its partnership with DeepMind, could be trusted. He thought it was too concerned about revenue and wasn’t doing enough to make sure its AI remained friendly. So he started his own AI company in December 2015, and seven years later we had ChatGPT.

But it was less than encouraging last month when its AI said it wanted to hack the nuclear codes. Researchers have said this was a ‘hallucination’ and an accident (and in all truth, it was coaxed into saying that by a New York Times journalist). But Altman has already said he’s ready to slow things down if people get nervous.

The question many are asking of Altman is: if you are so scared about AI wiping out humanity, why on earth are you building it? Altman said OpenAI was operating ‘as if the risks are existential’. But there’s a boyish sense of wonder to his mission: he wrote last month that he wants AI ‘to empower humanity to maximally flourish in the universe’. The upsides could be incredible: if AI helps humanity to become an interstellar species, some have calculated that it could bring the number of humans to exist in the future to 100,000,000,000,000,000,000,000,000,000,000,000 (yes, that’s 35 zeroes). No disease, no illness, no sadness: the AI of the future would know how to cure that. Altman basically thinks it’s worth the risk.

Altman wears some of his anxiety visibly. His voice trembles and his eyebrows do things. He once said that ‘AI will probably most likely lead to the end of the world, but in the meantime, there’ll be great companies’. The New Yorker asked him if he was scared about AI turning on us. ‘I try not to think about it too much’, he said. ‘But I have guns, gold, potassium iodide, antibiotics, batteries, water, gas masks from the Israeli Defence Force, and a big patch of land in Big Sur I can fly to’. In Siliconese, I believe that’s called ‘hedging’.

One person Altman has upset is Elon Musk. The world’s richest man was all aboard the OpenAI train when it was founded in 2015. But he left the board three years later. There’s some debate about why: the public explanation is that he was worried about conflicts of interest with his Tesla work. But there are some reports that he didn’t like the way Altman was running things. Semafor claimed this weekend that Musk thought OpenAI was being outpaced by Google. So in 2018, he suggested that he take over OpenAI and run it himself. That idea was reportedly rejected by everyone else, including Altman, and Musk lashed out: he quit the company, and last year barred it from having access to Twitter’s data. Originally founded as a non-profit, OpenAI had to change tack after Musk’s billions dried up. Musk now complains about OpenAI on Twitter (which Altman has called him a ‘jerk’ for doing), and on Friday he said that ‘the most powerful tool that mankind has ever created’ is in ‘the hands of a ruthless corporate monopoly’.

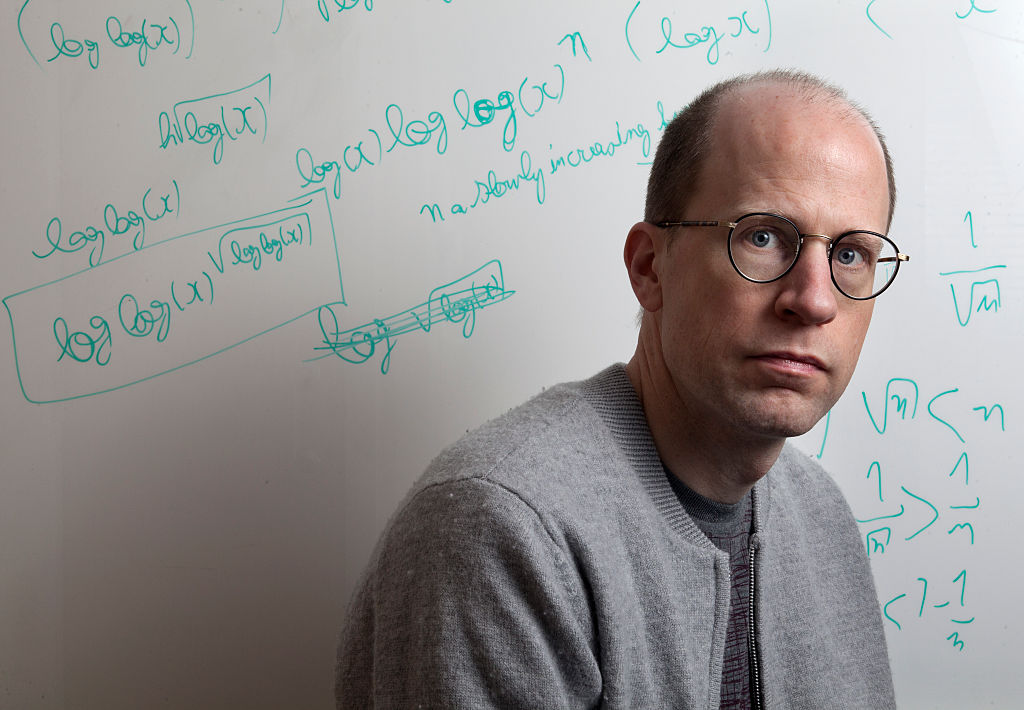

So Musk, whether out of concern or revenge, has started siding with the worriers. The most influential of these is Nick Bostrom, who is based at Oxford University’s Future of Humanity Institute. He made his name in the early 2000s when he suggested that we probably live in a computer simulation. After nestling that unsettling thought in our heads, he went on to work on cuddly topics like ‘global catastrophic risks’, and in 2014 published Superintelligence.

The book makes the most accessible argument yet for why people should be worried about AI. The problem, Bostrom says, is that its intelligence is nothing like ours. If you don’t tell it exactly what you want it to do, bad stuff happens. If I said to you ‘could you get me a coffee’, you would walk to the shop and mind pedestrians as you did so. If I said that to a badly ‘aligned’ AI, it might blitz everything in its path and bring back several metric tonnes of beans from Kenya. Bostrom’s example is called the ‘paperclip maximiser’. Imagine you’ve told a machine to make paperclips without specifying that you’d quite like us to all stay alive, thanks. After making paperclips for a while, the machine looks at you, and goes ‘you’re made of things I could make a paperclip out of’. It would soon start killing humans in its eternal quest to make as many clips as possible. We all die. It’s intelligence without common sense.

Taking this argument further is Eliezer Yudkowsky. He was warning us about superintelligence while George W. Bush was president. He recently went on a podcast and said ‘we’re all going to die’, and that we could do so within the next three years. When OpenAI was founded in 2015, he spent a night crying. When he was 20, he founded the Singularity Institute for Artificial Intelligence, intended to bring into being a utopia as quickly as possible. But over the 2000s, his thinking darkened, and he eventually set up the Machine Intelligence Research Institute (MIRI), which aims to stop AI from killing us. Yudkowsky seems to be giving up, though. MIRI last year announced that it was conceding defeat on AI safety after concluding that there was no surefire way to ‘align’ it, and it was switching to a ‘Death with Dignity’ strategy. The statement was released on April Fools’ Day, but most readers detected some sincerity. MIRI said we should accept that it’s lights-out, and try to have fun while we can.

Even though Yudkowsky is a celebrity in the AI world (he’s pictured here with Sam Altman and Grimes), he’s hardly a household name. Some – harshly – have said AI safety has been neglected because of ‘Eliezer refusing to get hotter’.

Yudkowsky communicates through the website LessWrong, where people really into existential risk, cognitive biases and rationality hang out. It’s been described as a cult, which it may or may not be, but it’s no less influential either way. At its best, it’s a concentration of incredibly intelligent people trying to make the world better. At worst, it can become one of those over-philosophical places where people say it’s technically rational to have sex with a dog. It was Yudkowsky and the economist Robin Hanson who made LessWrong famous, but Hanson disagrees with Yudkowsky. He thinks we’ve got much more time, and that we should start worrying in 150 years, perhaps. His argument is somewhat obscure, but part of it is that these things, throughout human history, take longer than you expect to develop.

Peter Thiel thinks all these people are pathetic. The PayPal co-founder told students at Oxford in January that those worried about existential risk are themselves going to kill people. They’re slowing down technological progress, they’re slowing down the curing of diseases: they’re slowing down the world getting better. Thiel can’t stand stagnation. He’s like a 1950s kid whose dream didn’t come true. As he once said: ‘They promised us flying cars and all we got is 140 characters.’ Thiel’s philosophy is: ‘Go, go, go!’ Buckle up.

A final word on Google. Last week it released Bard, its own chatbot, and is now posing as the sensible one. The brains behind Bard came from DeepMind, founded by Demis Hassabis, Shane Legg and Mustafa Suleyman. They met at UCL in 2010, and within four years had sold their start-up to Google for $500 million. They then made AlphaGo, which in 2015 beat the European Go champion Fan Hui and got everyone very excited. Their biggest achievement was in 2020, when they ‘largely solved’ the structure of proteins in the body, a 50-year-old problem. DeepMind is quietly changing the world from King’s Cross. It won’t come as a surprise to many Londoners that the apocalypse could start there.

Sam Altman is adamant that he has to race ahead to make the best AI, before anyone else does so recklessly. He’s got Google spooked: CEO Sundar Pichai declared a ‘code red’ when ChatGPT was released (in other words, hurry up!). The investment is pouring in, and slowing down doesn’t look too profitable. If we’re heading for a crash, we should probably check the drivers.
