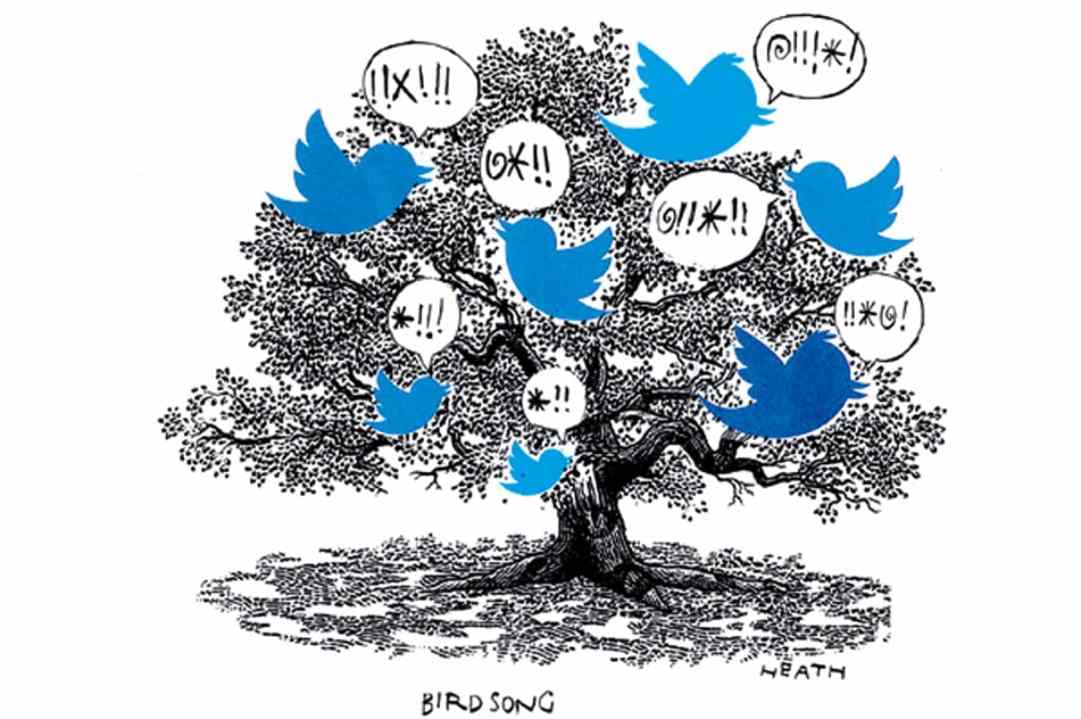

Algorithms dictate what we do – and don’t – see online. On Twitter and Facebook, they determine which posts do well and which get buried. Yet how they actually work is shrouded in secrecy. Elon Musk, who agreed a £34.5 billion takeover bid with Twitter’s board last month, has voiced his concern about algorithms: ‘I’m worried about de facto bias in “the Twitter algorithm” having a major effect on public discourse. How do we know what’s really happening?’ He is right to be alarmed.

‘Before you decide whether to publish, you have to think whether it will please the algorithm,’ says an anonymous social media editor who works at a 24-hour news channel. ‘If it doesn’t, it won’t perform well’.

Rather than serve content to people chronologically, social media companies deploy algorithms that prioritise or suppress content based on various factors. How many comments, shares and ‘likes’ a post receives can determine how well it is promoted on Twitter or Facebook. But there are concerns that the political and ideological preferences of the platforms may also be shaping what we see online. While Twitter and Facebook’s defenders insist this is not the case, there is no way of knowing for sure. Why? Because the algorithms remain a closely guarded secret.

What is clear is that some topics tend to do better than others. Twitter users like me might have noticed that Extinction Rebellion posts fare well, while posts about more politically troublesome issues, like immigration, tend to perform worse. Is this a coincidence? Without access to the algorithms, we cannot tell.

Facebook’s drive for ‘meaningful interaction’ has ended up promoting content that courts controversy and division. Leaked research findings suggest that Facebook and Instagram know exactly how harmful their platforms are to teenage girls. This is perhaps no surprise: it could be argued that feelings of inadequacy are intrinsic to how the platforms work.

Another social media editor, who works at a high-profile media organisation, tells me that stoking division is key to ‘gaming’ the algorithms.

‘If you know how algorithms work then you can exploit them,’ he says. ‘Content is designed to be divisive, and create an emotional response and confrontation. My job is to get the best results for my clients. I am judged by metrics and data. I am not there to make people feel better. My clients are interested in reach and sales. They don’t care whether people have a good day at the end of it.’

Yet while the government has made a great fanfare about its Online Safety Bill as a way of keeping Brits safe online, it appears to have missed an opportunity to address one of the most pressing problems on social media platforms: the secrecy behind how algorithms work.

The bill, which was mentioned in this week’s Queen’s Speech, says that online services should be held accountable for the design and operation of their systems. Most worryingly, the bill suggests that regulators might be given powers to audit and review algorithms.

Do we trust the UK government or Ofcom with that power? We have a Conservative government which has regulated how many people may meet up in private homes, whether a Scotch egg justifies a pint in the pub, and how many metres we must stand away from each other. The full machinery of the state was deployed to censor counter-narrative views and to frighten the public into compliance during the Covid pandemic. The road to social media hell will be paved with similarly good intentions.

The Online Safety Bill should present an opportunity to demand transparency, but the UK government appears reluctant to crack down. Nadine Dorries referred in the Commons to the Orwellian ‘disinformation and misinformation unit’, which talks to the social media companies regularly and asks them to remove content. This ‘unit’ comprises both the Counter Disinformation Cell and the Rapid Response Unit, as detailed in my book, A State of Fear. Could this explain the government’s reluctance to demand more transparency about the algorithms used by social media firms?

Rather than layer byzantine and subjective rules upon the internet, the government’s new regulation should instead force Big Tech to reveal to consumers how their websites really work. If social media is a public square, as Elon Musk has said, then it’s time to make the algorithms public.

Comments