As a member of Sage, I support public scrutiny of our scientific advice. However, once our models enter the public domain, they are interpreted in many different ways, and some of the crucial context can be lost.

Modelling is an important tool for epidemic management, one that has been tested against numerous infectious disease outbreaks and continues to be improved. If we knew what the future held, decisions would be easy, but we don't know what will happen. The alternative to using models is to guess. Models make the assumptions and data used explicit. It's repeatable science. Guessing is not repeatable: it relies on prejudice and wishful thinking, and it changes from day to day. Nobody wants government policy to be based on guessing.

The critical problem for decision-making is that the future is unpredictable: models cannot predict numbers accurately. This is mostly because human behaviour is often completely unpredictable. Back in June this year, we were trying to work out what the number of hospital admissions would be today. The answer depended on what we did between then and now, both individually and collectively, and on what decisions the government made, all of which were still in the future at that point. Had England been knocked out of the Euros early, we might not have seen the same rates of increase in July. And the 'pingdemic' might not have happened.

What we can do, though, is use models to construct scenarios ('what ifs') that governments can use to inform their decisions. If Plan B were introduced and reduced transmission, hospital admissions would likely be lower than otherwise, but models cannot predict exactly how much lower, because that depends on booster shots, variants, weather and, most importantly, behaviour. Government has to balance the harms of transmission against the harms of restrictions.

SPI-M, the subcommittee of Sage that I chair, produces scenarios to inform government decisions, not to inform public understanding directly. They are not meant to be predictions of what will actually happen, and a range of scenarios is presented to policymakers with numerous caveats and uncertainties emphasised. These scenarios can be misinterpreted in the media, where headlines often pick out the 'worst-case scenario' alone and later suggest we are 'doomsters' when it doesn't come to pass. But the media are not the primary audience for these models, though of course we want to do what we can to communicate with the media and the public.

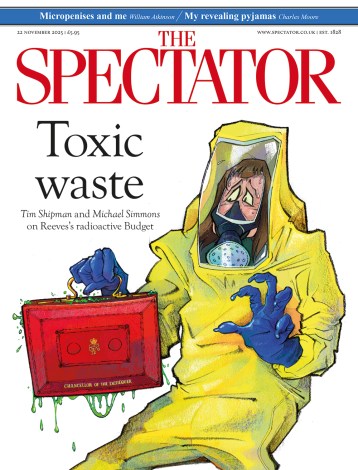

The media often like to compare previous 'what if' scenarios with what really happens, but that misses the point. One of The Spectator data tracker's 'Sage scenarios vs actual outcome' graphs compares a 'what if we do not introduce more restrictions' scenario with what really happened, ignoring the fact that new restrictions were introduced. It is a misinterpretation to say that because the graphs don't match, SPI-M modellers were wrong. It's a bit like being commissioned to draw a picture of the cat an owner plans to adopt, and then, when they decide to adopt a dog instead, being told your picture is completely wrong: goalposts being shifted long after the ball has been kicked.

Fewer restrictions and more vaccinations create uncertainties around human behaviour and immunology. We see this in the SPI-M scenarios for the summer of 2021 after the lifting of restrictions. Actual Covid hospitalisations and hospital occupancy are, thankfully, currently at the bottom end of the range of scenarios we put forward for what might happen. SPI-M produced consensus scenarios at the start of September looking at the potential impact of transmission in schools. Again, numbers are below the lowest scenario, which tells us we are in a better place than we might have been; crucially, though, these graphs give planners and policymakers a guide for what to prepare for.

Epidemic modelling is not exact, and we have never suggested it was; we are constantly testing, learning and improving. Decision-making under uncertainty is really hard, and modelling provides only one source of information. Alongside Sage modelling, economists and lobby groups also feed into government to inform its decisions.

The models are not perfect, although they have improved greatly during the course of the epidemic. They are very good at modelling what has already happened (we would not use models that cannot reproduce the past), but that does not solve the problem of predicting the future.
